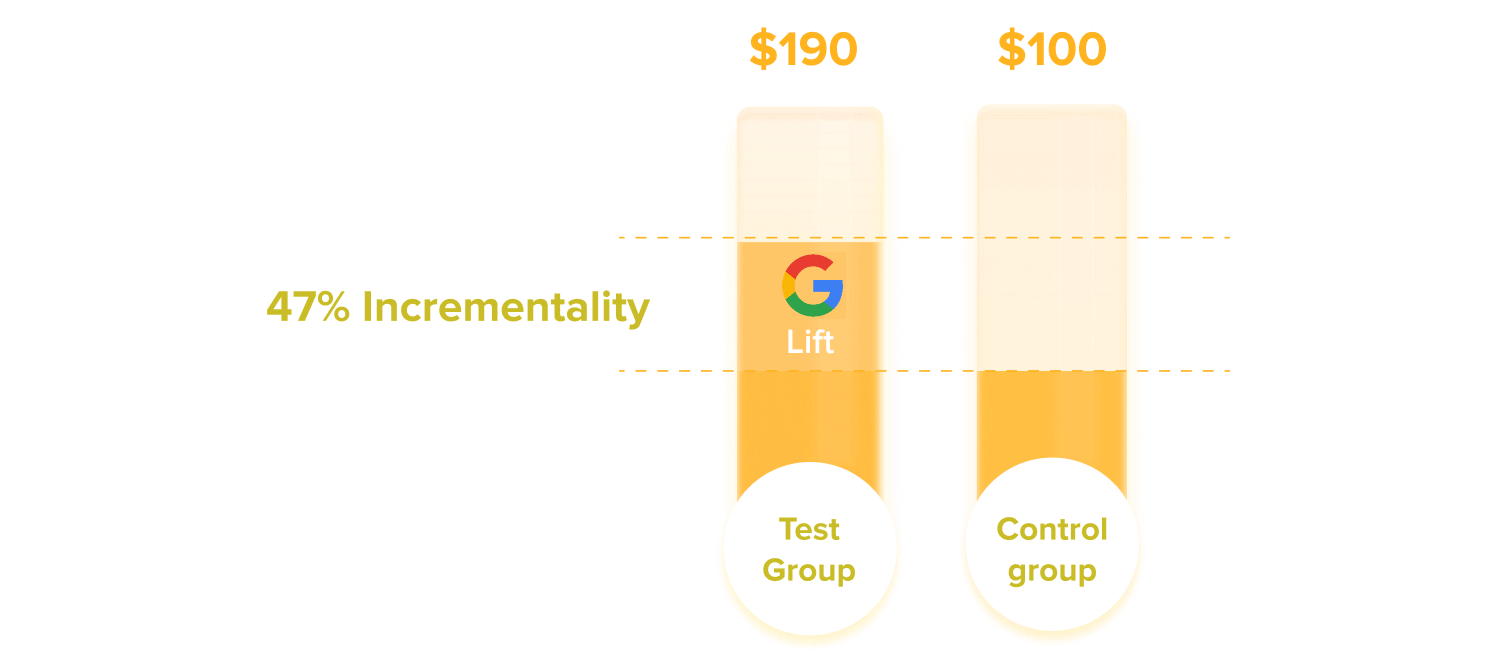

Disclaimer: I use incrementality, A/B testing, causal effect, and similar terms interchangeably in this post. In a nutshell, they all refer to measuring the true impact of advertising by comparing a test group (ads turned off) to a control group (ads running as usual).

Incrementality testing is a powerful tool for marketers to measure the true impact of their paid search campaigns. When I worked at eBay, overspending on paid performance channels was a constant concern and one of our biggest headaches. Ultimately, though, we all became far more sophisticated at measuring incrementality to mitigate cannibalization of our SEO traffic. This is a summary of my experience working on paid search at eBay, Amazon and Brex. I won’t be covering the day-to-day paid search optimization process; my goal is rather to highlight a few crucial elements of incrementality testing in search at a deeper level.

Another important note: Running incrementality tests is definitely not free - the opportunity cost of the dollars, talent and time needed to conduct them properly can be significant. Hence, this is not something teams with smaller budgets can run, since you will need fairly large sample sizes and conversion volume for a solid analysis. This particularly applies to B2B businesses with large marketing budgets but more limited exposure on paid search.
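To get a feel for what "fairly large" means, here is a rough sketch of a textbook two-proportion sample size calculation. The function name `users_per_group` and the example inputs (a 2% baseline conversion rate and a 10% relative drop when ads are off) are illustrative assumptions, not numbers from any of the tests described here. It also assumes user-level randomization; geo- or IP-randomized designs generally need more volume than this suggests.

```python
from math import ceil
from statistics import NormalDist


def users_per_group(baseline_rate, relative_drop, alpha=0.05, power=0.8):
    """Approximate users needed per group to detect a relative drop
    in conversion rate with a two-sided two-proportion z-test.

    All inputs are hypothetical planning assumptions.
    """
    if not 0 < baseline_rate < 1:
        raise ValueError("baseline_rate must be between 0 and 1")
    if not 0 < relative_drop <= 1:
        raise ValueError("relative_drop must be in (0, 1]")

    p_on = baseline_rate                          # conversion rate with ads running
    p_off = baseline_rate * (1 - relative_drop)   # expected rate with ads off

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power

    variance = p_on * (1 - p_on) + p_off * (1 - p_off)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p_on - p_off) ** 2)


if __name__ == "__main__":
    # Hypothetical planning inputs: 2% baseline, 10% relative drop.
    print(users_per_group(baseline_rate=0.02, relative_drop=0.10))
```

Halving the effect you want to detect roughly quadruples the required sample, which is why low-volume programs struggle to get a readable result.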

What does it look like in paid search and why should you care?

The basic concept of an incrementality test is the same as in any channel: We assess the causal effect of suppressing ads and make data-driven decisions about the marketing spend. The main difference in paid search is the test design. A well-controlled A/B test design for Google uses IP-based or geo-based targeting, instead of the cookies, named users, or device IDs used on almost any other paid channel.

When I worked in the growth team at eBay, we ran incrementality tests regularly, sometimes twice a year. This became crucial for optimizing paid search efforts and avoiding overspending based on inflated attribution values. By understanding the true incrementality of paid search, we could derive incrementality factors and therefore allocate our budgets more effectively and drive better results.
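As a minimal sketch of what an incrementality factor can look like in practice: one common definition is the share of attributed conversions that the test shows to be truly incremental, which is then used to discount attributed results. The function names and numbers below are hypothetical and are not eBay's actual methodology.

```python
def incrementality_factor(incremental_conversions, attributed_conversions):
    """Share of attributed conversions that the test found to be incremental."""
    if attributed_conversions <= 0:
        raise ValueError("attributed_conversions must be positive")
    return incremental_conversions / attributed_conversions


def incremental_roas(attributed_revenue, spend, factor):
    """Return on ad spend after discounting attributed revenue by the factor."""
    if spend <= 0:
        raise ValueError("spend must be positive")
    return attributed_revenue * factor / spend


if __name__ == "__main__":
    # Hypothetical inputs: the test measured 300 incremental conversions
    # against 1,000 conversions credited by the attribution model.
    factor = incrementality_factor(300, 1000)
    print(f"factor: {factor:.2f}")
    print(f"incremental ROAS: {incremental_roas(50_000, 10_000, factor):.2f}")
```

A campaign that looks profitable on attributed ROAS can fall below break-even once the factor is applied, which is the overspending risk described above.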

Designing an effective test for Google paid search

To accurately measure the incrementality of paid search campaigns, it’s essential to design a well-controlled A/B test. One approach is to use a hybrid Geo + User design, which involves turning off paid search for a specific portion of the audience based on location or IP address. There are two approaches, depending on the markets in which you are running ads:

IP-based

In an IP-based test, ads are suppressed for a select set of IP addresses. For example, all IPs ending with 3 (“IP-3”) would have ads turned off, while all other buckets (IP-0 to IP-9, excluding IP-3) would have ads running as usual. Using data from the pre-period (say a 12 to 18-month lookback), a statistical model is trained to predict what IP-3’s performance would have been in the test period (or post-period) had the test not happened. The difference between the actual performance (ads OFF) and the modeled prediction (what would have happened with ads ON) is used to measure the incremental lift.
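The counterfactual logic can be sketched with a deliberately simple model: a one-variable least-squares fit of the test bucket's daily conversions on the control buckets' conversions during the pre-period, then applied to the test period. This is an illustrative assumption; a production model would likely use more predictors and account for seasonality. All names and numbers are hypothetical.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("control series has no variation")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def estimate_lift(pre_control, pre_test, post_control, post_test):
    """Compare actual test-bucket conversions (ads OFF) with the
    modeled counterfactual (what the bucket would have done with ads ON)."""
    slope, intercept = fit_line(pre_control, pre_test)
    counterfactual = sum(intercept + slope * c for c in post_control)
    actual = sum(post_test)
    incremental = counterfactual - actual
    return {
        "counterfactual": counterfactual,
        "actual": actual,
        "incremental": incremental,
        "incremental_share": incremental / counterfactual,
    }


if __name__ == "__main__":
    # Hypothetical daily conversions. Pre-period: ads ON everywhere.
    pre_control = [100, 110, 120, 130]   # buckets other than IP-3
    pre_test = [10, 11, 12, 13]          # bucket IP-3
    # Test period: ads OFF for IP-3 only.
    post_control = [100, 120]
    post_test = [8.0, 9.6]
    print(estimate_lift(pre_control, pre_test, post_control, post_test))
```

The `incremental_share` here is the fraction of expected conversions that disappeared when ads were turned off, which is the quantity attribution models tend to overstate.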

Geo-based

For a more granular market-based approach, geo-based testing can be employed. In the US, this can be done using Nielsen’s Designated Market Areas (DMAs). Essentially, DMAs are groups of counties and zip codes where local TV viewing has been measured for decades. By turning ads off in certain DMAs and comparing them with “similar” regions where campaigns continue to run, we can measure the lift.

There are two methods for constructing geo-based test and control groups:

- DMA Matching: Select a set of DMAs to turn ads off and create a comparable set of DMAs where campaigns continue to run as usual. Matching is done based on historical data and across relevant metrics, usually looking back 12-18 months and accounting for a good geographical spread.

- Synthetic Control: Similar to the IP-based test, a candidate set of DMAs is identified to turn ads off, and a statistical model is trained using the pre-test period to determine the counterfactual (test group performance in the absence of the intervention).
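As a rough sketch of the DMA Matching idea, the snippet below greedily pairs each test DMA with the unused candidate DMA whose historical series correlates with it most strongly. Correlation on a single metric is an assumed similarity measure chosen for brevity; real matching would typically consider several metrics, market size, and geographical spread. The DMA names and numbers are hypothetical.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation of two equal-length series (0.0 if either is constant)."""
    n = len(a)
    a_bar, b_bar = sum(a) / n, sum(b) / n
    saa = sum((x - a_bar) ** 2 for x in a)
    sbb = sum((y - b_bar) ** 2 for y in b)
    if saa == 0 or sbb == 0:
        return 0.0
    sab = sum((x - a_bar) * (y - b_bar) for x, y in zip(a, b))
    return sab / sqrt(saa * sbb)


def match_dmas(history, test_dmas):
    """Greedily assign each test DMA its most correlated unused control DMA."""
    candidates = [d for d in history if d not in test_dmas]
    matches, used = {}, set()
    for test in test_dmas:
        available = [c for c in candidates if c not in used]
        if not available:
            raise ValueError("not enough candidate DMAs to match")
        best = max(available, key=lambda c: pearson(history[test], history[c]))
        matches[test] = best
        used.add(best)
    return matches


if __name__ == "__main__":
    # Hypothetical weekly conversions per DMA over the lookback window.
    history = {
        "dma_a": [10, 12, 15, 11, 9],
        "dma_b": [20, 24, 30, 22, 18],
        "dma_c": [15, 14, 10, 13, 16],
        "dma_d": [5, 5, 6, 5, 5],
    }
    print(match_dmas(history, test_dmas=["dma_a"]))
```

Greedy matching is order-dependent, so with many test DMAs it is worth checking whether a different ordering produces noticeably better pairs.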

For markets outside the US, a geo-test based on postal codes can be used, where a number of postal codes are grouped together. This can be a cumbersome exercise, though, and it can skew results if you are not able to “hash” homogeneous test and control populations. At eBay, geo-based testing was one of our biggest headaches, but we became far more sophisticated at measuring incrementality.

One of my favorite projects was setting up a long-running A/B test across major paid channels and then cross-checking the results with our attribution models. The amount of marketing budget that got optimized this way was significant.

Some key learnings to consider before you start

- The incremental sales were always smaller than what our internal attribution model, or Google, credited to paid search. If you can, you should conduct your own tests to measure true incrementality. But work with a platform partner or your data scientist to design them.

- Paid search was an important source of new users on every growth team I worked on, with the incremental new user acquisition lift often higher than the immediate sales lift that we measured.

- But… Ad spend needs to reach a certain threshold to enable overall paid search effectiveness. Increasing spend beyond that threshold could lead to cannibalization of other paid/organic channels, i.e. diminishing returns. Read the eBay studies I linked below.

- eBay’s research team also ran extensive studies which were somewhat more controversial. They revealed several important insights about the true impact of paid search campaigns at high scale at the time. Some of these findings were later refuted by our subsequent testing in the field, so take them with a grain of salt.

Any paid search specialist will tell you that the ecommerce market is highly competitive - and it is largely a pay-to-play landscape. But it is possible to extend the effectiveness of paid search campaigns by conducting regular incrementality tests. If you are interested in going deeper, feel free to reach out or check out the papers below:

- Computational Causal Inference - Introduces computational causal inference as an interdisciplinary field and discusses opportunities for scalability and open challenges.

- The Seasonality Of Paid Search Effectiveness From A Long Running Field Test - Details eBay’s extensive field test measuring the incremental impact of paid search campaigns and the seasonality of effectiveness.

- Did eBay Just Prove That Paid Search Ads Don’t Work? - Harvard Business Review article discussing eBay’s long-running A/B test on paid search effectiveness.

Thang Doan is a seasoned growth marketing leader with over 12 years of experience driving results through paid channels, data-driven experimentation, and marketing technology across ecommerce, fintech, software, and AI industries in both B2C and B2B settings. His expertise lies in developing growth strategies and high-volume testing frameworks for user acquisition and engagement, leveraging his deep understanding of experimentation, ad tech infrastructure, and AI automations to drive market leadership.

Feel free to reach out to me to discuss these or other strategies for maximizing ROI in your marketing programs: Connect on LinkedIn